After writing my last article on Getting NVIDIA NGC containers to work with VMware PVRDMA networks, I had a couple of people ask me “How do I set up PVRDMA networking on vCenter?” These are the steps that I took to set up PVRDMA networking in my lab.

RDMA over Converged Ethernet (RoCE) is a network protocol that allows remote direct memory access (RDMA) over an Ethernet network. It works by encapsulating an InfiniBand (IB) transport packet and sending it over Ethernet. If you’re working with network applications that require high bandwidth and low latency, RDMA will give you lower latency, higher bandwidth, and a lower CPU load than an API such as Berkeley sockets.

Full disclosure: I used to work for a startup called Bitfusion, and that startup was bought by VMware, so I now work for VMware. At Bitfusion we developed a technology for accessing hardware accelerators, such as NVIDIA GPUs, remotely across networks using TCP/IP, InfiniBand, and PVRDMA. I still work on the Bitfusion product at VMware, and spend a lot of my time getting AI and ML workloads to work across networks on virtualized GPUs.

In my lab I’m using Mellanox ConnectX-5 and ConnectX-6 cards on hosts running ESXi 7.0.2, managed by vCenter 7.0.2. The cards are connected to a Mellanox Onyx MSN2700 100GbE switch.

Since I’m working with Ubuntu 18.04 and 20.04 virtual machines (VMs) in a vCenter environment, I have a couple of options for high-speed networking:

- I can use PCI passthrough to pass the PCI network card directly through to the VM and use the network card’s native drivers on the VM to set up a networking stack. However, this means that my network card is only available to a single VM on the host and can’t be shared between VMs. It also breaks vMotion (the ability to live-migrate the VM to another host), since the VM is tied to a specific piece of hardware on a specific host. I’ve set this up in my lab, but stopped doing it because of the lack of flexibility and because we couldn’t identify any performance difference compared to SR-IOV networking.

- I can use SR-IOV and Network Virtual Functions (NVFs) to make the single card appear as if it’s multiple network cards with multiple PCI addresses, pass those through to the VM, and use the network card’s native drivers on the VM to set up a networking stack. I’ve set this up in my lab as well. I can share a single card between multiple VMs, and the performance is similar to PCI passthrough. The disadvantage is that setting up SR-IOV and configuring the NVFs is specific to a card’s model and manufacturer, so what works in my lab might not work in someone else’s environment.

- I can set up PVRDMA networking and use the PVRDMA driver that comes with Ubuntu. This is what I’m going to show how to do in this article.

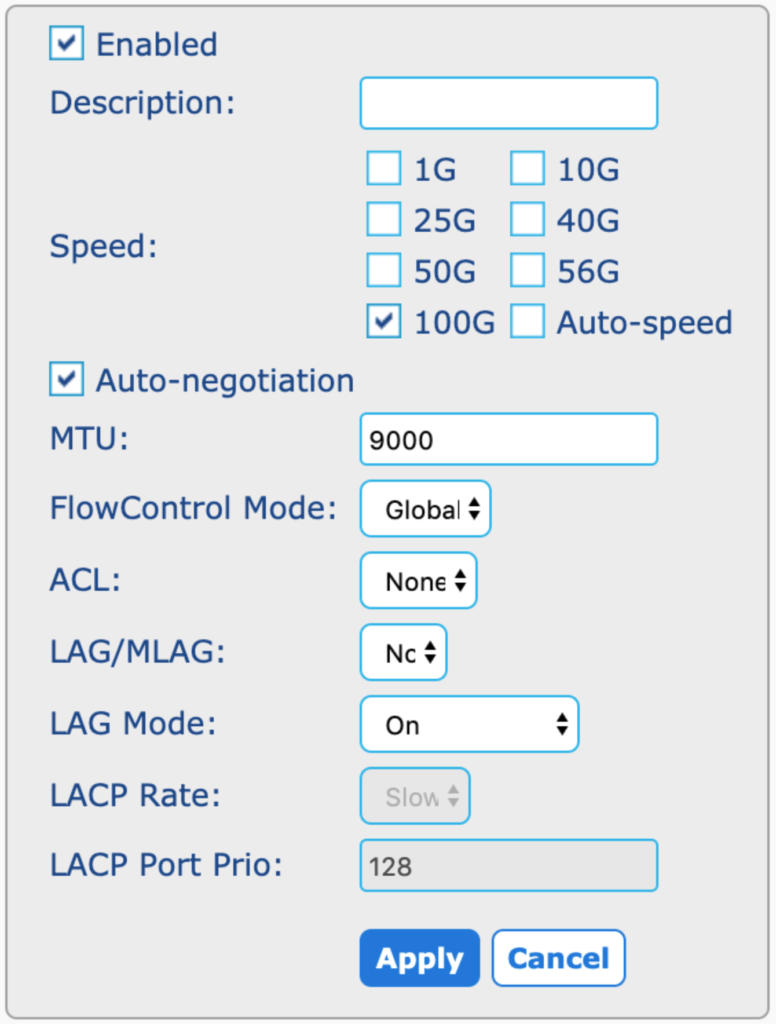

Set up your physical switch

First, make sure that your switch is set up correctly. On my Mellanox Onyx MSN2700 100GbE switch that means:

- Enable the ports you’re connecting to.

- Set the speed of each port to 100G.

- Set auto-negotiation for each link.

- MTU: 9000

- Flowcontrol Mode: Global

- LAG/MLAG: No

- LAG Mode: On

Set up your virtual switch

vCenter supports Paravirtual RDMA (PVRDMA) networking using Distributed Virtual Switches (DVS). This means you’re setting up a virtual switch in vCenter and you’ll connect your VMs to this virtual switch.

In vCenter, navigate to Hosts and Clusters, then click the DataCenter icon (it looks like a sphere or globe with a line under it). Find the cluster you want to add the virtual switch to, right-click it, and select Distributed Switch > New Distributed Switch.

- Name: “rdma-dvs”

- Version: 7.0.2 – ESXi 7.0.2 and later

- Number of uplinks: 4

- Network I/O control: Disabled

- Default port group: Create

- Port Group Name: “VM 100GbE Network”
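If you’d rather script this step, the open-source govc CLI (part of the govmomi project) can create an equivalent switch and port group. This is a minimal sketch, not the method used above. It assumes govc is installed and already pointed at your vCenter through GOVC_URL and credentials. It only prints the commands unless you set DRY_RUN=0. It also passes -mtu 9000, which covers the jumbo-frame setting described later.

```shell
#!/bin/sh
# Sketch: create the "rdma-dvs" switch and its default port group with govc.
# Assumes govc is installed and GOVC_URL / GOVC_USERNAME / GOVC_PASSWORD
# (and GOVC_DATACENTER if you have more than one) are already exported.
DVS="rdma-dvs"
PORTGROUP="VM 100GbE Network"

run() {
  # Print the command; only execute it when DRY_RUN=0 is set explicitly.
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

# Version 7.0.2, 4 uplinks, and MTU 9000 for jumbo frames
run govc dvs.create -product-version 7.0.2 -num-uplinks 4 -mtu 9000 "$DVS"

# Default port group that the VMs' PVRDMA adapters will connect to
run govc dvs.portgroup.add -dvs "$DVS" -type earlyBinding "$PORTGROUP"
```

Run it once as-is to review the commands, then with DRY_RUN=0 to apply them.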

Figure out which NIC is the right NIC

- Go to Hosts and Clusters

- Select the host

- Click the Configure tab, then Networking > Physical adapters

- Note which NIC is the 100GbE NIC for each host
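From the ESXi Shell, esxcli network nic list shows the same information. The filter below is a sketch built on a few assumptions. It expects esxcli’s default table layout: Name, PCI Device, Driver, Admin Status, Link Status, and Speed in Mbps, followed by the remaining columns. It prints the NICs whose link is up at 100000 Mbps. find_100g_nics is a hypothetical helper name.

```shell
#!/bin/sh
# find_100g_nics (hypothetical helper): read `esxcli network nic list` output
# on stdin and print the vmnic names whose link is Up at 100000 Mbps.
# Assumes the default column order: Name, PCI Device, Driver, Admin Status,
# Link Status, Speed, ... and skips the header and dashed separator lines.
find_100g_nics() {
  awk 'NR > 2 && $5 == "Up" && $6 == 100000 { print $1 }'
}

# Usage in the ESXi Shell:
#   esxcli network nic list | find_100g_nics
```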

Add Hosts to the Distributed Virtual Switch

- Go to Hosts and Clusters

- Click the DataCenter icon

- Select the Networks top tab and the Distributed Switches sub-tab

- Right click “rdma-dvs”

- Click “Add and Manage Hosts”

- Select “Add Hosts”

- Select the hosts. Use “auto” for uplinks.

- Select the physical adapters based on the list you created in the previous step, or find the Mellanox card in the list and add it. If more than one is listed, look for the card that’s “connected”.

- Manage VMkernel adapters (accept defaults)

- Migrate virtual machine networking (none)
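The Add and Manage Hosts wizard can also be scripted with govc dvs.add. This is another hedged sketch with three assumptions. First, govc must be configured for your vCenter. Second, the 100GbE NIC must have the same vmnic name on every host you list; if it doesn’t, run the script once per NIC name. Third, the host names in the usage line are placeholders. Like the previous sketch, it only prints the command unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch: attach each host's 100GbE uplink to rdma-dvs with govc.
# Usage: add-hosts.sh VMNIC HOST [HOST...]  (prints commands unless DRY_RUN=0)
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

add_hosts() {
  pnic="$1"
  shift
  # One govc call adds every listed host, using the same physical NIC on each
  run govc dvs.add -dvs rdma-dvs -pnic "$pnic" "$@"
}

# Only act when arguments are given, e.g.: add-hosts.sh vmnic4 esxi-01.lab esxi-02.lab
if [ "$#" -ge 2 ]; then
  add_hosts "$@"
fi
```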

Tag a vmknic for PVRDMA

- Select an ESXi host and go to the Configure tab

- Go to System > Advanced System Settings

- Click Edit

- Filter on “PVRDMA”

- Set Net.PVRDMAVmknic = "vmk0"

Repeat for each ESXi host.

Set up the firewall for PVRDMA

- Select an ESXi host and go to the Configure tab

- Go to System > Firewall

- Click Edit

- Scroll down to find pvrdma and check the box to allow PVRDMA traffic through the firewall.

Repeat for each ESXi host.
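Both per-host settings above, the vmknic tag and the pvrdma firewall rule, can also be applied from the ESXi Shell with esxcli. This sketch assumes you’ve enabled SSH or the ESXi Shell on each host and that vmk0 is the vmknic you want to tag, as above. Like the other sketches, it prints the commands and only runs them when DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch: tag a vmknic for PVRDMA and open the pvrdma firewall ruleset on one ESXi host.
# Run on each host, e.g.: DRY_RUN=0 sh pvrdma-host.sh vmk0
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

configure_pvrdma_host() {
  vmknic="${1:-vmk0}"
  # Same as setting Net.PVRDMAVmknic in Advanced System Settings
  run esxcli system settings advanced set -o /Net/PVRDMAVmknic -s "$vmknic"
  # Same as checking "pvrdma" under System > Firewall
  run esxcli network firewall ruleset set -r pvrdma -e true
  # Print the resulting values so you can confirm them
  run esxcli system settings advanced list -o /Net/PVRDMAVmknic
  run esxcli network firewall ruleset list -r pvrdma
}

configure_pvrdma_host "$@"
```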

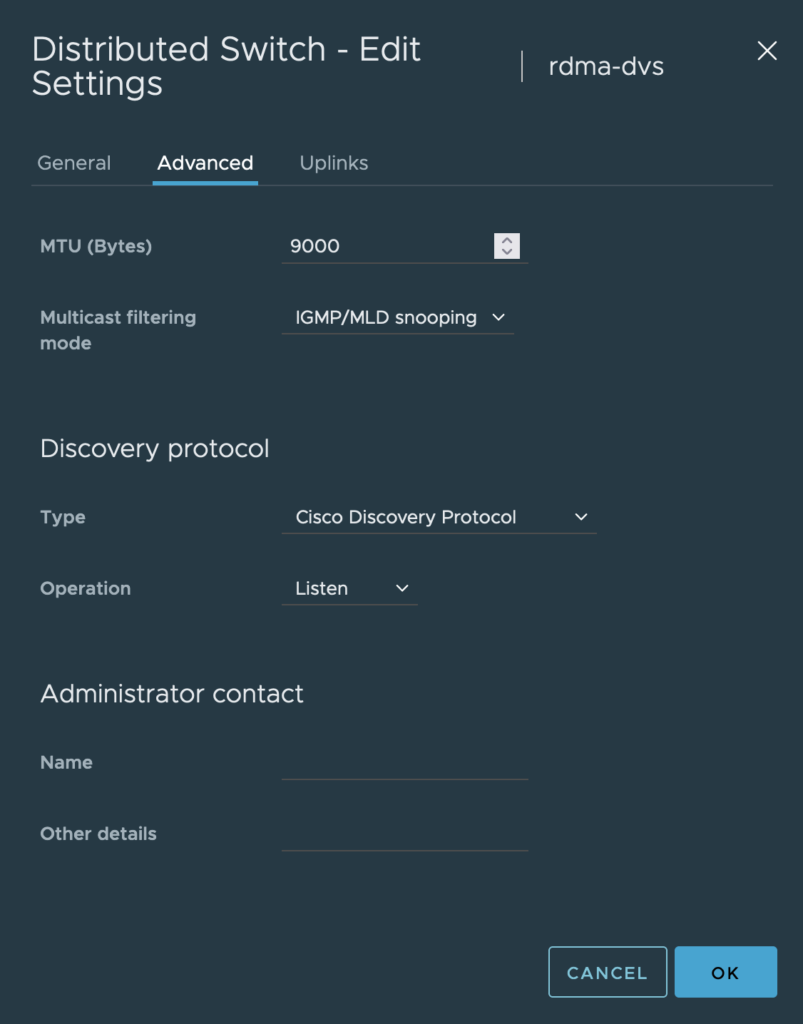

Set up Jumbo Frames for PVRDMA

To enable jumbo frames on a vCenter cluster that uses distributed virtual switches, you have to set the MTU to 9000 on the Distributed Virtual Switch.

- Click the Data Center icon.

- Click the Distributed Virtual Switch that you want to set up, “rdma-dvs” in this example.

- Go to the Configure tab.

- Select Settings > Properties.

- Look at Properties > Advanced > MTU. This should be set to 9000. If it’s not, click Edit.

- Click Advanced.

- Set MTU to 9000.

- Click OK.

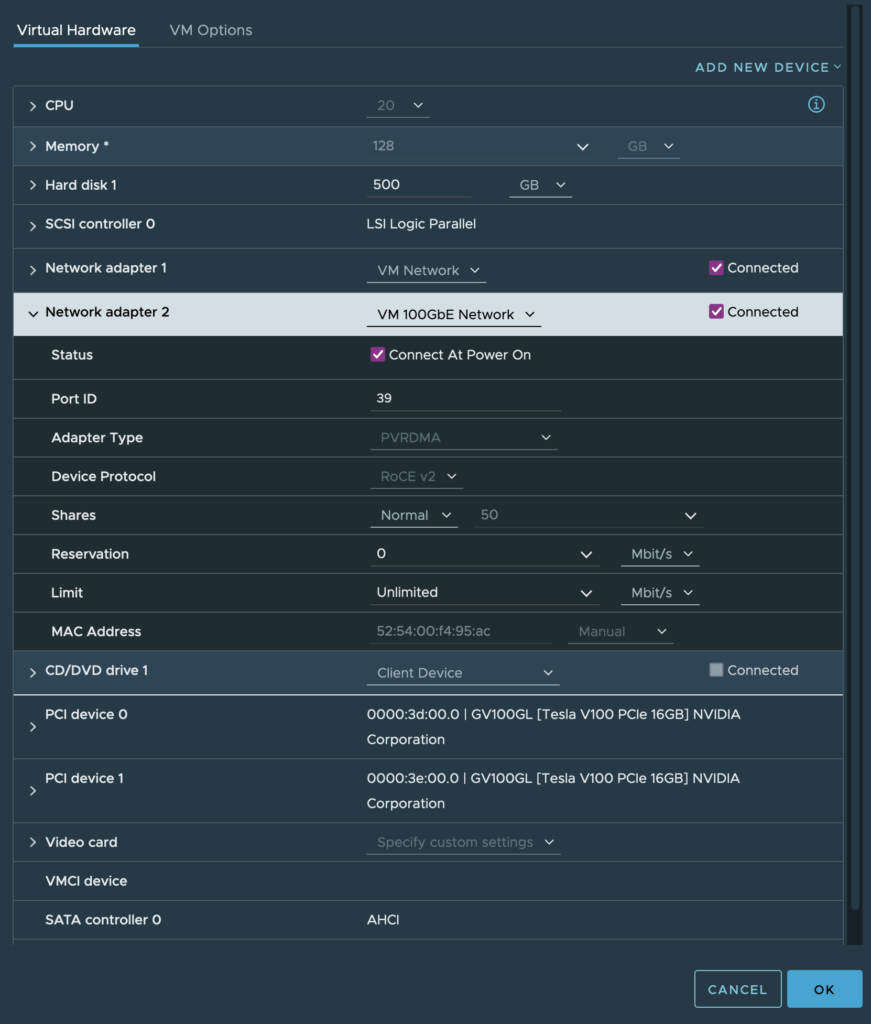
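A quick way to confirm that jumbo frames make it end to end is a don’t-fragment ping sized to fill the MTU. The largest payload that fits is the MTU minus the 20-byte IPv4 header and the 8-byte ICMP header, so 9000 - 28 = 8972 bytes. This sketch assumes Linux iputils ping in a guest whose PVRDMA interface is also set to MTU 9000. On an ESXi host, the equivalent is vmkping -d -s 8972, with -I to pick the vmknic.

```shell
#!/bin/sh
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet:
# MTU - 20 (IPv4 header, no options) - 8 (ICMP header).
max_icmp_payload() {
  echo $(( $1 - 20 - 8 ))
}

# Usage: jumbo-check.sh PEER_IP [MTU]
# "-M do" sets don't-fragment (Linux iputils ping), so the packets can't be
# split into smaller ones; if anything on the path can't carry them, the pings fail.
if [ -n "${1:-}" ]; then
  ping -M do -c 3 -s "$(max_icmp_payload "${2:-9000}")" "$1"
fi
```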

Add a PVRDMA NIC to a VM

- Edit the VM settings

- Add a new device

- Select “Network Adapter”

- Pick “VM 100GbE Network” for the network.

- Connect at Power On (checked)

- Adapter type PVRDMA (very important!)

- Device Protocol: RoCE v2

Configure the VM

For Ubuntu:

    sudo apt-get install rdma-core infiniband-diags ibverbs-utils

Tweak the module load order

In order for RDMA to work, the vmw_pvrdma module has to be loaded after several other modules. Maybe someone else knows a better way to do this, but the method that I got to work was adding a script, /usr/local/sbin/rdma-modules.sh, that loads the InfiniBand modules and then reloads vmw_pvrdma, and calling that script from /etc/rc.local so it runs at boot time.

    #!/bin/bash
    # rdma-modules.sh
    # Modules that need to be loaded for PVRDMA to work
    /sbin/modprobe mlx4_ib
    /sbin/modprobe ib_umad
    /sbin/modprobe rdma_cm
    /sbin/modprobe rdma_ucm
    # Once those are loaded, reload the vmw_pvrdma module
    /sbin/modprobe -r vmw_pvrdma
    /sbin/modprobe vmw_pvrdma

Once that’s done, just set up the PVRDMA network interface the same as any other network interface.
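Ubuntu 18.04 and 20.04 don’t ship an /etc/rc.local by default. systemd’s rc-local.service runs it at boot only if the file exists and is executable. The helper below, write_rc_local (a hypothetical name), sketches one way to create an rc.local that calls rdma-modules.sh. It refuses to overwrite an existing rc.local. The script itself also needs to be executable: sudo chmod 755 /usr/local/sbin/rdma-modules.sh.

```shell
#!/bin/sh
# write_rc_local (hypothetical helper): create an rc.local that runs the
# module-ordering script at boot. Arguments default to the paths used above.
write_rc_local() {
  rc="${1:-/etc/rc.local}"
  script="${2:-/usr/local/sbin/rdma-modules.sh}"

  # Never clobber an existing rc.local; just report whether it already calls the script.
  if [ -e "$rc" ]; then
    if grep -qF "$script" "$rc"; then
      echo "$rc already calls $script"
      return 0
    fi
    echo "$rc exists; add $script above its 'exit 0' line by hand" >&2
    return 1
  fi

  cat > "$rc" <<EOF
#!/bin/sh -e
# Load the RDMA modules in the order vmw_pvrdma needs
$script
exit 0
EOF
  # rc-local.service only runs /etc/rc.local when the file is executable
  chmod 755 "$rc"
}
```

For example, save it as write-rc-local.sh and run sudo sh -c '. ./write-rc-local.sh && write_rc_local'.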

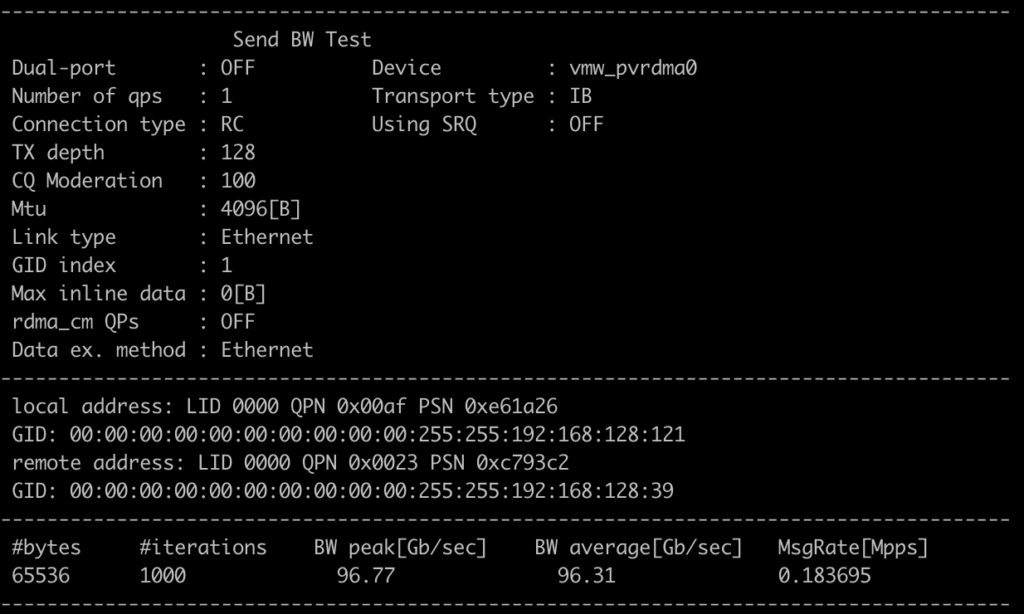

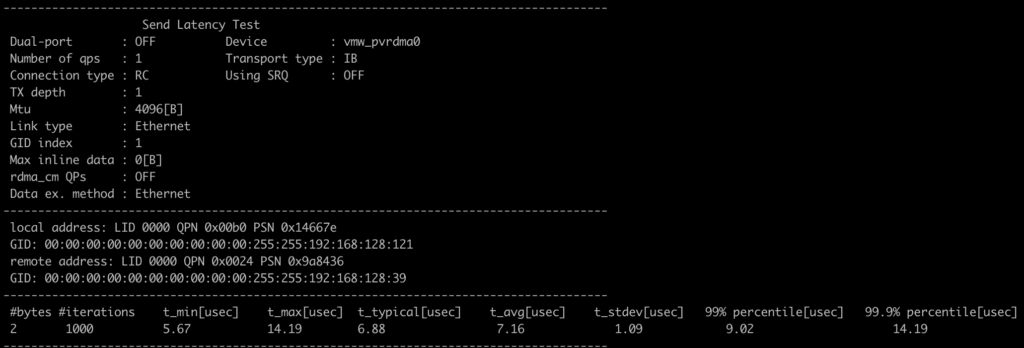
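Before running any benchmarks, it’s worth confirming that the guest sees the PVRDMA device and that its port is active. ibv_devinfo (from ibverbs-utils) prints each RDMA device with a state line per port. On a PVRDMA VM the device is typically named vmw_pvrdma0. This sketch uses two hypothetical helpers, port_states and any_port_active, to condense that output.

```shell
#!/bin/sh
# port_states (hypothetical helper): condense `ibv_devinfo` output read on stdin
# into one "<device> port <n> <state>" line per port.
# Matching $1 exactly keeps the separate "phys_state:" line from being picked up.
port_states() {
  awk '$1 == "hca_id:" { dev = $2 }
       $1 == "port:"   { port = $2 }
       $1 == "state:"  { print dev, "port", port, $2 }'
}

# any_port_active (hypothetical helper): succeed if at least one port is PORT_ACTIVE.
any_port_active() {
  port_states | grep -q ' PORT_ACTIVE$'
}

# Usage inside the VM:
#   ibv_devinfo | port_states
#   ibv_devinfo | any_port_active && echo "PVRDMA port is active"
```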

Testing the network

To verify that I’m getting something close to 100Gbps on the network, I use the perftest package (on Ubuntu: sudo apt-get install perftest).

To test bandwidth, I pick two VMs on different hosts. On one VM I run:

    $ ib_send_bw --report_gbits

On the other VM I run the same command, adding the IP address of the PVRDMA interface on the first machine:

    $ ib_send_bw --report_gbits 192.168.128.39

That sends a bunch of data across the network and reports back:

So I’m getting an average of 96.31Gbps over the network connection.
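If you run the bandwidth test from a script, the figure quoted above comes from the BW average column. This sketch pulls that column out of ib_send_bw output read on stdin. It assumes perftest’s usual table layout: data rows follow the #bytes header, start with the message size, and have BW average in the fourth column. avg_bw is a hypothetical helper name.

```shell
#!/bin/sh
# avg_bw (hypothetical helper): print the "BW average" column from
# ib_send_bw output read on stdin. Assumes the usual perftest table:
#   #bytes  #iterations  BW peak  BW average  MsgRate
# Rows after the "#bytes" header whose first field is an integer are results.
avg_bw() {
  awk '/#bytes/ { in_table = 1; next }
       in_table && $1 ~ /^[0-9]+$/ { print $4 }'
}

# Usage on the client VM:
#   ib_send_bw --report_gbits 192.168.128.39 | avg_bw
```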

I can also check the latency using ib_send_lat:

Hope you find this useful.

Really useful! But I have a question: can I also use Mellanox ConnectX-5 VPI 100GbE / EDR Socket Direct cards with host chaining to perform RDMA (RoCEv2) directly between two hosts, without the need for a switch?

I’ve done that with Mellanox ConnectX-4, ConnectX-5, and ConnectX-6 cards and it’s worked, but not the specific card you’re talking about. Seems like that should work OK.

OK, I tried all the drivers available for VPI (4.19 and 4.21), but I get no ping between the two directly connected hosts. When I pass the VPI card through to Ubuntu (dynamic PCI passthrough), ping and iperf work… I don’t understand it. It seems VPI only works well with OFED; with the native ESXi drivers it doesn’t seem to work, but the documentation says it should…

VMware writes in their vSAN how-to that using the ESXi OFED drivers is mandatory for any RDMA/iSER and whatnot to work. Same info on the old Mellanox driver page about which driver to pick from their list.

I’m not sure what page you’re looking at that says that. The OFED drivers are not required for ESXi, guest VMs, or vSAN. The Linux rdma-core package is all you need on a Linux guest VM, and there are VIBs that ship with ESXi that handle all of the ConnectX cards.

Thanks for sharing! If I build a 64-bit Linux VM with ZFS (NVMe RAID) on ESXi and enable iSER (iSCSI over RDMA), can it be used as high-performance storage for the ESXi host?

I’m not sure. I haven’t tried running iSCSI over RDMA. I’ll make a note to try that and find out.

What did you find out about iSER on ESXi?

How did you activate it?


Hi, great tutorial! I’m still wondering, however, whether the 100GbE physical adapter should be dedicated solely to PVRDMA use, or whether it can also carry the other “regular” network traffic at the same time. In other words, should I have additional physical adapters on the hosts for vMotion, regular VM networking, etc.?

I usually set up multiple virtual switches on separate VLANs on the 100GbE connection and assign them to vMotion, vSAN, or anything else that I need a segregated network for. You can also implement traffic shaping by VLAN, so if you don’t want vMotion to suck up all of your bandwidth you can rate-limit the vMotion VLAN. You just have to make sure that your physical switch is configured for the VLAN IDs that you’re using on the 100GbE port; other than that, setting up additional virtual switches is easy.