Full disclosure: I used to work for a startup called Bitfusion, and that startup was bought by VMware, so I now work for VMware. At Bitfusion we developed a technology for accessing hardware accelerators, such as NVIDIA GPUs, remotely across networks using TCP/IP, Infiniband, and PVRDMA. Although I still do some work on the Bitfusion product at VMware, I spend most of my time these days seeing what I can do on the vSphere platform using the latest AI/ML accelerator hardware from NVIDIA, Intel, and AMD.

Although I work at VMware, this is my own personal blog, and any views, opinions, or mistakes I publish here are purely my own and are not official views or recommendations from VMware.

This specific article is based on a talk I just gave at VMware Explore Las Vegas.

Everyone wants the latest, greatest GPUs for AI/ML training and inference workloads. As I’m sure most of you know, GPUs are just specialized matrix processors. They can quickly perform mathematical operations — in parallel — on matrices of numbers. Although GPUs were originally designed for graphics, it turns out that being able to do matrix math is extremely useful for AI/ML.

Unfortunately, every GPU vendor on the planet seems to have about a one-year order backlog for datacenter-class GPUs. If you’re having a hard time buying GPUs, one way to increase the performance of your AI/ML workloads is to let the CPU’s AMX instructions do some of that work, lessening the need for expensive and hard-to-procure GPUs.

Advanced Matrix Extensions (AMX) are a new set of instructions available on recent Intel x86 CPUs, starting with Sapphire Rapids. These instructions operate on matrices to accelerate artificial intelligence and machine learning workloads, and they are beginning to blur the line between CPUs and GPUs for machine learning applications.

When I started hearing that Intel Sapphire Rapids CPUs were embedding matrix operations in the CPU’s instruction set, I started wondering what I could do with those instructions using AI/ML tools.

“We can do good inference on Skylake, we added instructions in Cooper Lake, Ice Lake, and Cascade Lake. But AMX is a big leap, including for training.”

— Bob Valentine, the processor architect for Sapphire Rapids


As you replace older hosts with Sapphire Rapids-based hosts, you not only get performance improvements for traditional computing, you also get AMX capabilities for AI/ML workloads. You can run diverse AI and non-AI multi-tenant workloads side by side in a virtualized environment, and you can repurpose the infrastructure between AI and non-AI use cases as demand changes without additional capex. The ubiquity of Intel Xeon and vSphere in on-prem and cloud environments, combined with an optimized AI software stack, lets you quickly scale compute in hybrid environments. You can run your entire end-to-end AI pipeline (data prep, training, optimization, inference) using CPUs with built-in AI acceleration.

Does this really work? What kind of workloads can I run?

Here’s a demo I did using an llm-foundry LLM with a 7B-parameter model from Hugging Face. The code is installed in a container and the model is loaded in a Kubernetes volume. I first start the LLM in a Tanzu cluster on an Ice Lake CPU-based system with no GPUs. As you can see, it takes a while just to load the model into memory, and once it starts the output is pretty jerky and slow.

I then start the exact same container on a Tanzu cluster running on a Sapphire Rapids CPU-based system with no GPUs. The hardware is roughly equivalent (both were considered mid-range servers at the time they were purchased) and the VMs are equivalent in memory and vCPUs, but the Sapphire Rapids system runs much faster than the previous-generation Ice Lake system.

In addition to the side-by-side comparison above of an LLM running on Ice Lake vs. Sapphire Rapids, we also fine-tuned an LLM using just Sapphire Rapids CPUs. Starting with an off-the-shelf LLAMA2-7B model, we fine-tuned it with the “Finance-Alpaca” dataset of about 17,500 queries. We used cnvrg.io to manage the AI pipeline and PyTorch distributed fine-tuning. It took about 3.5 hours to complete on a 4-VM Tanzu cluster running on Sapphire Rapids (4th Gen Xeon) hardware.

Once the model was fine-tuned with financial data we ran 3 chatbots on a single host. We could now ask it questions such as “What is IRR?”, “What is NPV?”, and “What is the difference between IRR and NPV?” and get correct and detailed answers back from the LLM.

We just took an off-the-shelf LLM, fine-tuned it with financial services information in about 3.5 hours, and now we have a chatbot that can answer basic questions about finance and financial terms. No GPUs were used to do any of this.

You may not want to run every ML workload you have on just CPUs, but there are a lot of them that you can run on just CPUs. Workloads will run even faster with GPUs, but you may not want to pay for GPUs for every workload you run if the speed of a CPU is good enough.

vSphere Requirements for using AMX

If you want to try this in your vSphere environment this is what you’ll need:

- Hardware with Sapphire Rapids CPUs.

- Guest VMs running Linux kernel 5.16 or later. Kernel 5.19 or later recommended.

- Guest VMs using HW version 20 (ESXi 8.0u1, vCenter 8.0u1).

- If you’re running Kubernetes, your worker nodes will also need to run Linux kernel 5.16 or later.
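The kernel requirement in the list above is easy to check from inside a guest VM or worker node. The following is a minimal Python sketch, not part of any VMware or Intel tooling: the function names are made up for illustration, and it assumes the kernel release string begins with `major.minor`, as it does on stock Ubuntu kernels.

```python
import platform
import re

MIN_AMX_KERNEL = (5, 16)  # AMX support was added in Linux 5.16


def parse_kernel_release(release: str) -> tuple[int, int]:
    """Extract (major, minor) from a release string such as '6.2.0-26-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_supports_amx(release: str) -> bool:
    """Return True if the kernel release is 5.16 or later."""
    return parse_kernel_release(release) >= MIN_AMX_KERNEL


if __name__ == "__main__":
    running = platform.release()  # same value that `uname -r` prints
    verdict = "new enough for AMX" if kernel_supports_amx(running) else "too old for AMX"
    print(f"{running}: {verdict}")
```

Comparing `(major, minor)` tuples avoids the classic mistake of comparing version strings lexically, where "5.9" would sort after "5.16".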

Hardware

Obviously you need hardware that supports AMX if you want to use AMX. I’m using Intel Sapphire Rapids (4th Gen Xeon) CPUs. The hosts have motherboards that support DDR5 memory and PCIe 5.0. In my lab I’m currently testing with Dell R760, Dell R660, and Supermicro SYS-421GE-TNRT servers.

Linux Kernel 5.16 or later

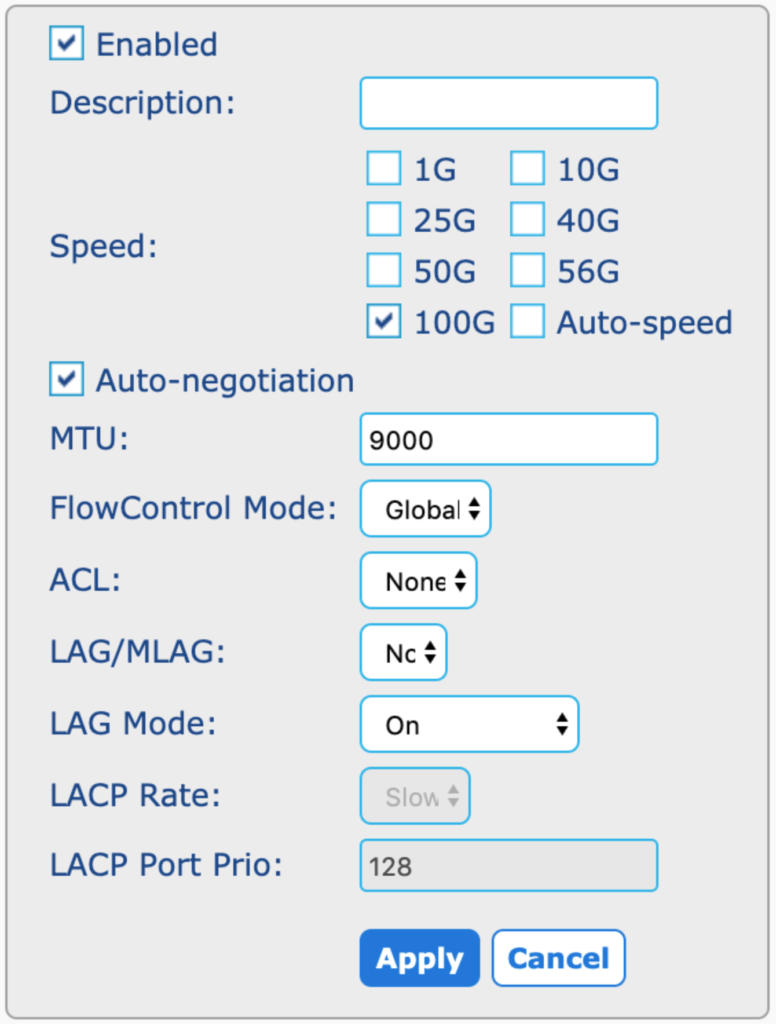

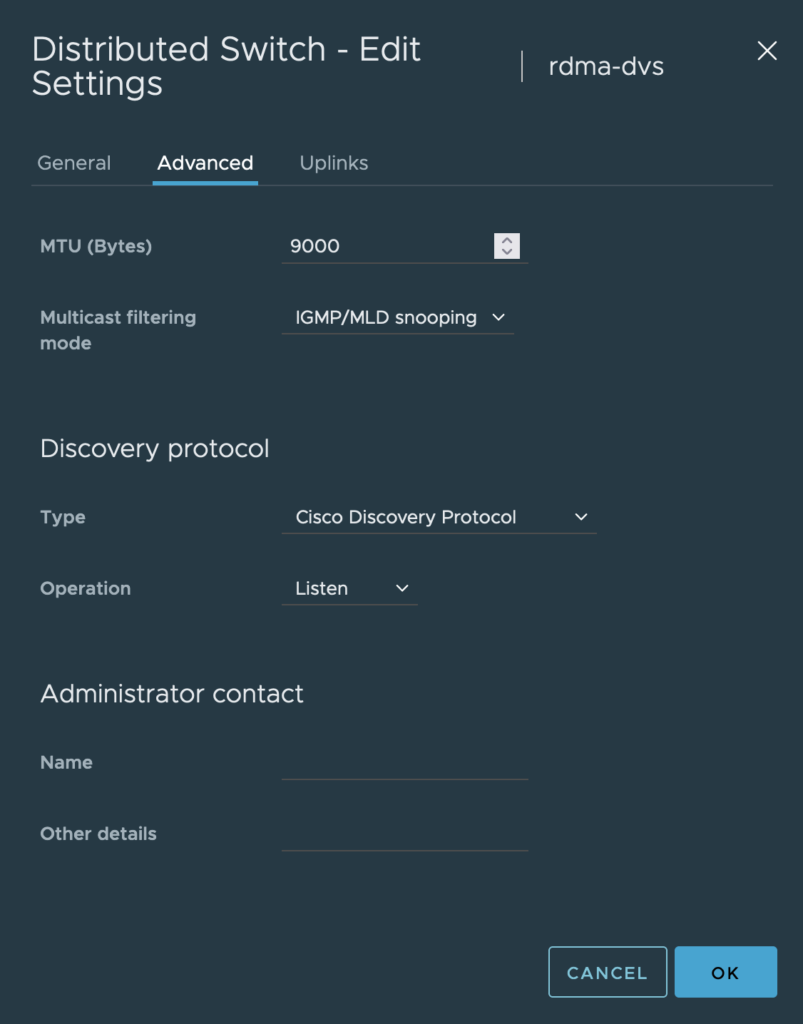

Support for AMX was added to the Linux 5.16 kernel, so if you want to use AMX you’ll need to use 5.16 or a later kernel. In my tests for guest VMs I tried Ubuntu 22.04 images with the 5.19 kernel and images using 6.2 kernels, both of which worked fine. Although Ubuntu 22.04 ships with a 5.15 kernel, the 6.2 kernel is available using the hardware enablement (HWE) kernel package that comes with 22.04. The HWE kernel can be installed with apt:

sudo apt update
sudo apt install \
  --install-recommends \
  linux-image-generic-hwe-22.04

vSphere 8.0u1 and Hardware Version 20

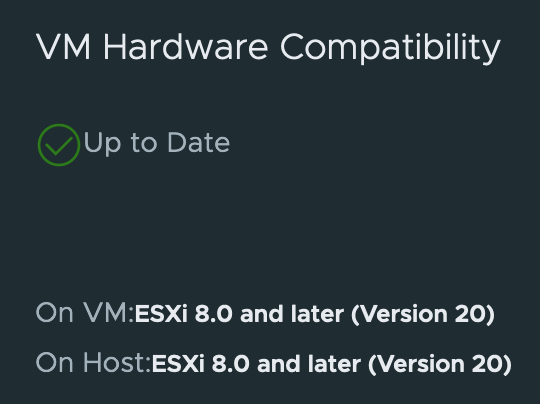

Which capabilities of the underlying hardware are virtualized in vSphere is determined by the hardware version (HW version) of the guest VM. The AMX instructions are virtualized in HW version 20, so if you want to access AMX instructions in vSphere you need to be using HW version 20 on your VMs.

To find out what HW version a VM is using, in vCenter go to the VM, click the Updates tab, and click the CHECK STATUS button.

HW version 20 is supported on ESXi 8.0u1. To run ESXi 8.0u1 you’ll need vCenter 8.0u1. If you’re still running vCenter 7 and you want to try this technology out, I suggest that you upgrade to vCenter 8 as soon as you can, then start upgrading your ESXi hosts to ESXi 8.
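If you collect VM inventory with a script, the HW version check can be automated as well. This is a small sketch under one assumption: that the version arrives as a string such as "vmx-20", which is how vSphere reports a VM's hardware version in its configuration. The helper names are hypothetical.

```python
MIN_AMX_HW_VERSION = 20  # AMX instructions are virtualized starting with HW version 20


def hw_version_number(version: str) -> int:
    """Convert a hardware version string such as 'vmx-20' (or plain '20') to an int."""
    text = version.strip().lower()
    if text.startswith("vmx-"):
        text = text[len("vmx-"):]
    if not text.isdigit():
        raise ValueError(f"unrecognized hardware version: {version!r}")
    return int(text)


def hw_version_supports_amx(version: str) -> bool:
    """Return True if the VM hardware version is 20 or later."""
    return hw_version_number(version) >= MIN_AMX_HW_VERSION
```

For example, `hw_version_supports_amx("vmx-19")` is false, so a VM at that version would need its virtual hardware upgraded before the guest can see AMX.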

Once you have a Linux VM with a 5.19 kernel (or later) running HW version 20, any AI/ML framework that you run on that VM will have access to the hardware’s AMX instructions. If you run Docker on the VM, any AI/ML containers that you run will be running on the VM’s kernel and will have access to the hardware’s AMX instructions. If the versions of the tools that you’re using were compiled to use AMX, they’ll now run faster using the matrix math capabilities of the Sapphire Rapids CPU, no GPUs necessary.

Tanzu Requirements for using AMX

The kernel requirement also applies to Tanzu worker nodes. Whatever kernel is installed on your worker nodes is the kernel that your Kubernetes pods use. To use AMX your Tanzu worker nodes need to be running kernel 5.16 or later.

Tanzu comes with a set of pre-built, automatically-updated node images called Tanzu Kubernetes Releases (TKRs). Each image is an OVA file that deploys a Kubernetes control node or a worker node. A node is just a Linux VM with a specific version of Kubernetes installed on it and a specific Linux kernel.

When installing Tanzu one of the steps is to set up a Content Library where TKRs are stored. The TKRs are automatically downloaded from VMware into the Content Library whenever new TKRs are released.

When you upgrade a Tanzu Kubernetes cluster, say from Kubernetes 1.23 to 1.24, the Tanzu Supervisor Cluster creates a new VM from the 1.24 TKR image, waits for it to join the cluster, then evacuates, shuts down, and deletes one of your 1.23 nodes. The Supervisor Cluster repeats this over and over, first replacing your cluster’s control nodes, then replacing the cluster’s worker nodes, until all of the nodes in the cluster are running Kubernetes 1.24.

Note: Kubernetes should only be upgraded from one minor release to the next minor release. If you have a cluster running Kubernetes 1.20 and you want to upgrade to 1.24, you have to first upgrade to 1.21, then 1.22, then 1.23, and finally to 1.24. Skipping a minor version is not recommended and may break your cluster.
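The one-minor-release-at-a-time rule can be written down as a tiny helper that lists the intermediate upgrades. This is an illustrative Python sketch only; the function names are made up, and it deliberately handles just upgrades within a single major version.

```python
def parse_minor(version: str) -> tuple[int, int]:
    """Turn '1.24', 'v1.24.9' or 'v1.24.9+vmware.1' into (1, 24)."""
    parts = version.lstrip("v").split(".")
    return int(parts[0]), int(parts[1])


def upgrade_path(current: str, target: str) -> list[str]:
    """List each minor release to upgrade through, in order, ending at the target."""
    cur_major, cur_minor = parse_minor(current)
    tgt_major, tgt_minor = parse_minor(target)
    if cur_major != tgt_major:
        raise ValueError("this sketch only handles upgrades within one major version")
    if tgt_minor < cur_minor:
        raise ValueError("downgrades are not supported")
    return [f"{cur_major}.{minor}" for minor in range(cur_minor + 1, tgt_minor + 1)]


if __name__ == "__main__":
    print(" -> ".join(["1.20"] + upgrade_path("1.20", "1.24")))
```

Each entry in the returned list corresponds to one full rolling upgrade of the cluster, so a jump from 1.20 to 1.24 means four separate upgrades.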

VMware publishes two different TKR images for each version of Kubernetes, one based on PhotonOS and one based on Ubuntu.

At this time VMware has not yet published a TKR with a 5.19 (or later) kernel. If you want to start using Sapphire Rapids AMX instructions and you want to use Tanzu Kubernetes, you have two choices:

- Wait for the official TKR from VMware with a 5.19 (or later) kernel.

- Build your own TKR using the Bring Your Own Image (BYOI) process.

UPDATE: VMware released a TKR on 2023-11-07 with a kernel that supports AMX.

You can read about how to install it here.

Bring Your Own Image (BYOI)

To build an image, follow the instructions on the GitHub page vSphere Tanzu Kubernetes Grid Image Builder. The process is fairly straightforward. The steps I followed were:

I cloned the repo with git clone:

$ git clone https://github.com/vmware-tanzu/vsphere-tanzu-kubernetes-grid-image-builder.git

I edited the packer-variables/vsphere.j2 file so it contained information about my vSphere environment. I also created a folder called “BYOI” under my cluster in vCenter and specified that folder in the config, so any “work in progress” images or VMs generated by the BYOI tool would be created in one place.

Make sure you put the correct values for your vSphere environment in the packer-variables/vsphere.j2 file. The first time I tried this I was using another group’s environment to build a TKR, I used the wrong network name, and I spent about 2 hours trying to figure out why the image was erroring out.

I ran make list-versions to get a list of the available versions:

$ make list-versions
Kubernetes Version | Supported OS
v1.24.9+vmware.1 | [photon-3,ubuntu-2004-efi]
v1.25.7+vmware.3-fips.1 | [photon-3,ubuntu-2004-efi]

I am going to use v1.24.9+vmware.1, so I ran this to download the artifacts:

$ make run-artifacts-container KUBERNETES_VERSION=v1.24.9+vmware.1
Using default port for artifacts container 8081
Error: No such container: v1.24.9---vmware.1-artifacts-server
Unable to find image 'projects.registry.vmware.com/tkg/tkg-vsphere-linux-resource-bundle:v1.24.9_vmware.1-tkg.1' locally
v1.24.9_vmware.1-tkg.1: Pulling from tkg/tkg-vsphere-linux-resource-bundle
2731d8df91a4: Pull complete
73c864854baf: Pull complete
08eb7dea6abf: Pull complete
52654f918c81: Pull complete
da27b4bff06e: Pull complete
797512e2c717: Pull complete
0a994466e4a6: Pull complete
31d1a74dbc07: Pull complete
b3444fea81b1: Pull complete
193c65bff1b1: Pull complete
Digest: sha256:9dcec246657fa7cf5ece1feab6164e200c9bc82b359471bbdec197d028b8e577
Status: Downloaded newer image for projects.registry.vmware.com/tkg/tkg-vsphere-linux-resource-bundle:v1.24.9_vmware.1-tkg.1
26a10c7dea32e04b07e6de760982253b5044ab5a06d1330fef52c5463f19e26c

Customize the TKR OVA Image

The last step is to build the TKR OVA file, but before I build it I want to add two customizations. I need to use VM Hardware version (aka “VMX version”) 20 for the OVA, and I need to make sure that we build an Ubuntu OVA with a kernel >= 5.16.

The GitHub README docs have examples of how to customize the OVA. The first example shows how to change the HW version, and the second one shows how to add new OS packages. Reading those two examples tells me what I need to do.

Use HW Version 20 for the Image

I edit the packer-variables/default-args.j2 file and change the vmx_version:

"vmx_version": "20",

Install a Kernel >= 5.16 on the Image

Earlier when I ran make list-versions I noticed that the v1.24.9+vmware.1 Kubernetes version supports Ubuntu 20.04. However, the only way to get a packaged kernel >= 5.16 installed is to install the Ubuntu 22.04 linux-image-generic-hwe-22.04 package, and vsphere-tanzu-kubernetes-grid-image-builder does not currently have a base image for 22.04.

Since I need 22.04, and 20.04 is the only version available, I’m going to force Packer to do a release upgrade before generating the OVA. To do that I’m going to install the jammy-updates repo from 22.04. When I do that, the vSphere Tanzu Kubernetes Grid Image Builder will cause Packer to upgrade the image to Ubuntu 22.04 and I can then install the Ubuntu 22.04 linux-image-generic-hwe-22.04 package.

Following the instructions from Adding new OS packages and configuring the repositories or sources:

I create a directory repos under ansible/files/

I create a file ansible/files/repos/ubuntu.list which contains the lines:

deb http://us.archive.ubuntu.com/ubuntu/ jammy-updates main restricted
deb http://security.ubuntu.com/ubuntu jammy-security main restricted
deb http://us.archive.ubuntu.com/ubuntu/ jammy main restricted

I create the file packer-variables/repos.j2 which contains:

{
  {% if os_type == "photon-3" %}
  "extra_repos": "/image-builder/images/capi/image/ansible/files/repos/photon.repo"
  {% elif os_type == "ubuntu-2004-efi" %}
  "extra_repos": "/image-builder/images/capi/image/ansible/files/repos/ubuntu.list"
  {% endif %}
}

Doing all of that will add the jammy-updates repo to the TKR image. Now to add the kernel package I go back to the same packer-variables/default-args.j2 file we were editing earlier, I look for the extra_debs line and add the HWE kernel package for Ubuntu 22.04, linux-image-generic-hwe-22.04:

"extra_debs": "unzip iptables-persistent nfs-common linux-image-generic-hwe-22.04",

Now that I’ve made those changes I can build the TKR OVA.

Build the Image

The main GitHub README page says I can run make build-node-image to build the OVA, but I want to use a specific version of Kubernetes and I want to use Ubuntu 20.04, so I assume I need to pass some extra parameters to make. Typing make help gives me all of the information I need to construct the right build command:

IP=[my VM's IP address, where the artifact container is running]
make build-node-image \
  OS_TARGET=ubuntu-2004-efi \
  KUBERNETES_VERSION=v1.24.9+vmware.1 \
  TKR_SUFFIX=spr \
  HOST_IP=$IP \
  IMAGE_ARTIFACTS_PATH=${HOME}/image

This takes a while to run and will create and configure a VM on your vSphere cluster that will be used to create the TKR OVA image. If you want to watch the build, run the docker logs command that make build-node-image spits out:

docker logs -f v1.24.9---vmware.1-ubuntu-2004-efi-image-builder

When the process is done you should have an image file named ${HOME}/image/ovas/ubuntu-2004-amd64-v1.24.9---vmware.1-spr.ova
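The container, OVA, and TKR names used in this walkthrough all follow one visible pattern: the "+" in the Kubernetes version becomes "---", and then a suffix is appended. The Python sketch below reproduces only that pattern, inferred from the names that appear in this article. It is not taken from the image builder's source, the function names are made up, and other versions or OS targets may be named differently.

```python
def tkr_safe_version(kubernetes_version: str) -> str:
    """Replace '+' with '---', e.g. 'v1.24.9+vmware.1' -> 'v1.24.9---vmware.1'."""
    return kubernetes_version.replace("+", "---")


def artifacts_container_name(kubernetes_version: str) -> str:
    """Name of the artifacts container, as seen in the make output."""
    return f"{tkr_safe_version(kubernetes_version)}-artifacts-server"


def image_builder_container_name(kubernetes_version: str, os_target: str) -> str:
    """Name of the build container to pass to `docker logs -f`."""
    return f"{tkr_safe_version(kubernetes_version)}-{os_target}-image-builder"


def tkr_reference_name(kubernetes_version: str, tkr_suffix: str) -> str:
    """TKR name to use later in the cluster YAML."""
    return f"{tkr_safe_version(kubernetes_version)}-{tkr_suffix}"


def ova_file_name(os_arch_prefix: str, kubernetes_version: str, tkr_suffix: str) -> str:
    """OVA file name; os_arch_prefix (e.g. 'ubuntu-2004-amd64') is passed in, not derived."""
    return f"{os_arch_prefix}-{tkr_reference_name(kubernetes_version, tkr_suffix)}.ova"


if __name__ == "__main__":
    version, suffix = "v1.24.9+vmware.1", "spr"
    print(image_builder_container_name(version, "ubuntu-2004-efi"))
    print(ova_file_name("ubuntu-2004-amd64", version, suffix))
```

Keeping the suffix short and distinctive (here "spr") makes the custom image easy to tell apart from the official TKRs in the Content Library.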

Add the Image to a local Content Library

In order for Tanzu to be able to use the image it has to be added to a local content library. If you don’t have a local content library create one by going to vSphere Client > Content Libraries > Create.

Once you’ve created the library click the library name to pull it up on the screen and click Actions > Import Item. Upload the ubuntu-2004-amd64-v1.24.9---vmware.1-spr.ova file.

Associate the Content Library with the Cluster Namespace

Go to vSphere Client > Workload Management > “your cluster namespace”, then click MANAGE CONTENT LIBRARIES on the VM Service tile. Make sure that the local library, and any other libraries used by your Cluster Namespace, are checked.

Deploy Your Own Image

To create a Kubernetes cluster you create a YAML file and run kubectl on it. The following YAML file builds a cluster based on the ubuntu-2004-amd64-v1.24.9---vmware.1-spr.ova TKR image, which was built from the Ubuntu 20.04 base image (upgraded to 22.04 during the build) and contains Kubernetes 1.24.9 and a Linux HWE kernel (currently kernel 6.2).

apiVersion: run.tanzu.vmware.com/v1alpha3
kind: TanzuKubernetesCluster
metadata:
  name: my-tanzu-kubernetes-cluster-name
  namespace: my-tanzu-kubernetes-cluster-namespace
  annotations:
    run.tanzu.vmware.com/resolve-os-image: os-name=ubuntu
spec:
  topology:
    controlPlane:
      replicas: 3
      vmClass: guaranteed-small
      storageClass: vsan-default-storage-policy
      tkr:
        reference:
          name: v1.24.9---vmware.1-spr
    nodePools:
    - name: worker
      replicas: 3
      vmClass: guaranteed-8xlarge
      storageClass: vsan-default-storage-policy
      volumes:
      - name: containerd
        mountPath: /var/lib/containerd
        capacity:
          storage: 160Gi
      tkr:
        reference:
          name: v1.24.9---vmware.1-spr

A couple of notes on this YAML file:

- For a stable, easily-upgradable cluster I recommend a minimum of 3 control plane nodes and 3 worker nodes.

- The metadata section’s annotations line must be present to use an Ubuntu TKR as the base image.

- The TKR reference just refers to the first part of the TKR’s file name. You can see the TKR file names by looking in the vCenter Content Library you set up for Tanzu. To get a list of valid reference names:

kubectl config use-context $my-tanzu-kubernetes-cluster-namespace
kubectl get tanzukubernetesreleases

Only the names that have READY=True and COMPATIBLE=True can be used to deploy a cluster.

- In order to allocate a separate, larger volume for storing docker images on the worker nodes I added a volumes section. I have a storage class defined named vsan-default-storage-policy and the volumes section will allocate a 160GiB volume using the disk specified by vsan-default-storage-policy and mount it on the worker node using the path /var/lib/containerd, which is where container images are stored. Change vsan-default-storage-policy to the name of a storage policy defined for your tanzu-kubernetes-cluster-namespace if you want this to work on your system.

- Since images are downloaded as needed, the containerd volume will be destroyed when a worker node is destroyed. It will be destroyed and recreated (empty) when a worker node is upgraded.
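If you deploy more than one cluster, generating the manifest from a script keeps the notes above from being forgotten: the Ubuntu annotation is always present and the control plane and workers always reference the same TKR. The following Python sketch builds the same structure as the YAML file and prints it as JSON, which kubectl also accepts. The function and parameter names are made up for illustration; the field names and values are the ones from the YAML file above.

```python
import json

# Annotation required to deploy an Ubuntu-based TKR.
UBUNTU_ANNOTATION = {"run.tanzu.vmware.com/resolve-os-image": "os-name=ubuntu"}


def tkr_ref(tkr_name: str) -> dict:
    """Build a fresh TKR reference block."""
    return {"reference": {"name": tkr_name}}


def build_cluster_manifest(name: str, namespace: str, tkr_name: str,
                           storage_class: str, replicas: int = 3,
                           control_plane_class: str = "guaranteed-small",
                           worker_class: str = "guaranteed-8xlarge",
                           containerd_size: str = "160Gi") -> dict:
    """Return a TanzuKubernetesCluster manifest as a plain dict."""
    return {
        "apiVersion": "run.tanzu.vmware.com/v1alpha3",
        "kind": "TanzuKubernetesCluster",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": dict(UBUNTU_ANNOTATION),
        },
        "spec": {"topology": {
            "controlPlane": {
                "replicas": replicas,
                "vmClass": control_plane_class,
                "storageClass": storage_class,
                "tkr": tkr_ref(tkr_name),
            },
            "nodePools": [{
                "name": "worker",
                "replicas": replicas,
                "vmClass": worker_class,
                "storageClass": storage_class,
                # Separate, larger volume for container images on each worker.
                "volumes": [{
                    "name": "containerd",
                    "mountPath": "/var/lib/containerd",
                    "capacity": {"storage": containerd_size},
                }],
                "tkr": tkr_ref(tkr_name),
            }],
        }},
    }


if __name__ == "__main__":
    manifest = build_cluster_manifest(
        "my-tanzu-kubernetes-cluster-name",
        "my-tanzu-kubernetes-cluster-namespace",
        "v1.24.9---vmware.1-spr",
        "vsan-default-storage-policy",
    )
    print(json.dumps(manifest, indent=2))
```

Redirect the output to a file (for example cluster.json, a name chosen here only as an example) and pass that file to kubectl apply -f just as you would the YAML version.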

I recommend deploying a fresh cluster using this YAML file just so you can try it out and see how it works. Once you’ve deployed a new cluster any AI/ML containers that you run will be running on a 6.2 kernel and will have access to the hardware’s AMX instructions. If the version of the tools that you’re using were compiled to use AMX, they’ll now run faster using the matrix math capabilities of the Sapphire Rapids CPU — no GPUs necessary.

Upgrading an existing Tanzu Kubernetes cluster to the new TKR image

To upgrade an existing Tanzu Kubernetes 1.23 cluster to 1.24 using the new TKR image:

- Modify the existing 1.23 cluster’s YAML file to refer to the v1.24.9---vmware.1-spr TKR image.

- Make sure that the YAML file has the annotations line so the Supervisor will deploy an Ubuntu-based TKR.

Then run:

kubectl config use-context $my-tanzu-kubernetes-cluster-namespace
kubectl apply -f $my-yaml-filename

If you can’t find your cluster’s YAML file you can also do this:

kubectl config use-context $my-tanzu-kubernetes-cluster-namespace
kubectl edit tanzukubernetescluster/$my-tanzu-kubernetes-cluster-name

This will pull up a system editor (vim on my system) containing the cluster’s freshly-generated current YAML file. Make the changes and save the file. Any changes you make will be applied immediately when you save the file.

Check the deployed cluster VMs

You can ssh into a cluster’s VMs and check the kernel version running and verify that you can see the amx flags for the CPUs, indicating that the extra instructions are accessible. In vCenter find one of the cluster’s VMs and get the IP address. To get the ssh password:

kubectl config use-context my-tanzu-kubernetes-cluster-namespace
kubectl get secret \
  my-tanzu-kubernetes-cluster-name-ssh-password \
  -o jsonpath='{.data.ssh-passwordkey}' \
  -n my-tanzu-kubernetes-cluster-namespace | base64 -d

ssh -o PubkeyAuthentication=no vmware-system-user@vm-ip-address

$ uname -a
Linux my-tanzu-kubernetes-cluster-name-02-twk2c-wzsjc 6.2.0-26-generic #26~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Thu Jul 13 16:27:29 UTC 2 x86_64 x86_64 x86_64 GNU/Linux
$ grep VERSION_ID /etc/os-release
VERSION_ID="22.04"
$ grep amx /proc/cpuinfo | head -1
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid tsc_known_freq pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single ssbd ibrs ibpb stibp ibrs_enhanced fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb avx512cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves avx_vnni avx512_bf16 wbnoinvd arat avx512vbmi umip pku ospke avx512_vbmi2 gfni vaes vpclmulqdq avx512_vnni avx512_bitalg avx512_vpopcntdq rdpid cldemote movdiri movdir64b fsrm md_clear serialize amx_bf16 avx512_fp16 amx_tile amx_int8 flush_l1d arch_capabilities

With these instructions you should now be able to create VMs and Kubernetes clusters that can access Sapphire Rapids AMX instructions. Any AI/ML framework that you run will have access to the hardware’s AMX instructions. If the versions of the tools that you’re using were compiled to use AMX, they’ll now run faster using the matrix math capabilities of the Sapphire Rapids CPU, no GPUs necessary.
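Picking the three AMX flags out of that long flags line by eye is error-prone, so here is a short Python sketch that does the same check programmatically. It is only an illustration: the function names are made up, and the set of expected flags is simply the three that appear in the output above (amx_tile, amx_bf16, amx_int8).

```python
from pathlib import Path

# The AMX flags visible in the /proc/cpuinfo output shown above.
EXPECTED_AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags(cpuinfo_text: str) -> set[str]:
    """Return every CPU flag starting with 'amx' found in /proc/cpuinfo-style text."""
    found: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            found.update(flag for flag in value.split() if flag.startswith("amx"))
    return found


def has_amx(cpuinfo_text: str) -> bool:
    """Return True if all expected AMX flags are present."""
    return EXPECTED_AMX_FLAGS <= amx_flags(cpuinfo_text)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        text = cpuinfo.read_text()
        print("AMX flags:", sorted(amx_flags(text)) or "none")
        print("AMX available:", has_amx(text))
    else:
        print("/proc/cpuinfo not found; run this inside the Linux guest")
```

If the flags are missing inside the guest even though the host has Sapphire Rapids CPUs, the usual suspects are the two requirements covered earlier: the VM's HW version and the guest kernel version.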

Hope you find this useful.