My 10+-year-old Synology NAS failed recently. I tried replacing the power supply and the CMOS battery, but it would not come back to life, so I started looking for a replacement.

I originally intended to buy another Synology NAS, but when I looked over the various models I concluded that Synology was not keeping up with the competition on hardware: I could get a NAS from a competitor with faster networking, a faster CPU, and more RAM for about half the price. Also, many of the apps I'd originally used on the Synology NAS were no longer supported by Synology, and most of the new apps were Synology-branded, of unknown provenance and unknown support status, rather than open-source apps I could Google a fix for if I had a problem.

After looking at a few brands I picked a low-end 4-drive Terra Master, the F4-424. The F4-424 comes in three flavors: the F4-424, F4-424 Pro, and F4-424 Max. All three share the same chassis; the main differences between them are the CPU, GPU, RAM, and NICs. I'm mostly using the NAS as backup storage for all of my home computers, plus test storage (MinIO, IPFS) for various Kubernetes test clusters, so I didn't need lots of RAM, a fast CPU, or 10GbE. Even the low-end F4-424 gave me a 4-core Intel CPU, 8GB of DDR5 RAM, and 2x 2.5GbE NICs, which is more than adequate for my needs.

I’d read that the Terra Master OS (TOS) wasn’t great, but what sold me on the Terra Master is that it’s basically a low-power Intel PC, so if you want to install some other OS on it, like TrueNAS Scale, openmediavault, or UnRAID, you can. I also read that Terra Master had just released TOS 6, and that it was a huge improvement over previous releases.

I set up the Terra Master with 4x Western Digital 8TB “Red” NAS drives and 2x Western Digital 1TB “Black” NVMe drives, and used TOS 6 for a week. I thought it was fine: it was fairly simple to set up and run, it could use the NVMe drives as a R/W cache, and it supported Time Machine backups, iSCSI, SMB, and NFS. I had no issues with it.

But I wanted to try out TrueNAS Scale so I downloaded a copy of the ISO and burned it to a USB flash drive. The Terra Master has a single USB-A port on the back, so I connected a USB hub and plugged in the flash drive, a keyboard, and a mouse. (There are YouTube videos that say you need to take the NAS apart and install the flash drive in an internal USB slot. You do not need to do this.)
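Before writing the ISO to a flash drive, it can be worth confirming the download isn't corrupted by comparing its SHA-256 hash with the checksum published alongside the download. The script below is a minimal sketch of that check using only Python's standard library; the script name `verify_iso.py` and the command-line arguments are hypothetical, and it assumes you have copied the expected hex digest from the download page.

```python
import hashlib
import sys


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the hex SHA-256 digest of a file, reading it in chunks
    so a multi-gigabyte ISO never has to fit in memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checksum_matches(path, expected_hex):
    """Compare the file's digest to the published one, ignoring case and
    surrounding whitespace in the pasted checksum."""
    return sha256_of_file(path) == expected_hex.strip().lower()


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python verify_iso.py <iso-file> <expected-sha256>
    iso_path, expected = sys.argv[1], sys.argv[2]
    if checksum_matches(iso_path, expected):
        print("OK: checksum matches")
    else:
        print("MISMATCH: re-download the ISO before writing it")
        sys.exit(1)
```

Reading in 1MB chunks keeps memory use flat regardless of the ISO's size; only write the image to the USB drive once the script prints `OK`.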

I rebooted the NAS and started hitting the DEL key when it first powered on to get into the BIOS. First I went to the Boot screen and disabled the “UTOS Boot First” setting. This is the setting that tells the NAS to boot TOS 6 from a 3.75GB internal flash drive.

Next I went to the Save & Exit screen and selected my USB drive as the Boot Override device. The NAS booted from the USB flash drive, and I followed the TrueNAS Scale install instructions to install it on the first NVMe drive. The install only took a minute or two.

Once that was done, I rebooted again and hit the DEL key during startup to get back into the BIOS. This time I went to the Boot screen to change the primary boot device. One of the NVMe disks was now labeled “Debian”. TrueNAS Scale is built on Debian, so that's the TrueNAS disk; I selected it, then saved and exited.

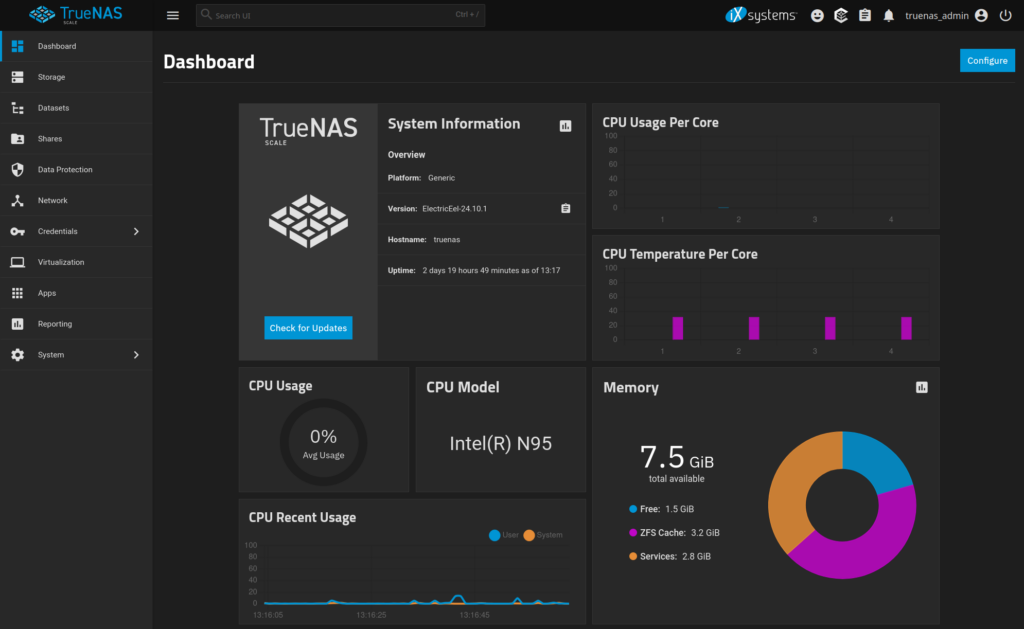

Once the NAS booted up, the screen displayed the IP address and URL for logging in, so I went to my laptop and logged into the web UI to finish the setup. I added the 4x 8TB drives to a single RAIDZ1 storage pool and added the remaining 1TB NVMe drive to the pool as a cache device.
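RAIDZ1 spends one drive's worth of space on parity, so a 4x 8TB RAIDZ1 pool has roughly 3 x 8TB of usable capacity before overhead. The snippet below is a back-of-the-envelope sketch of that arithmetic; the function name is hypothetical, and it deliberately ignores ZFS metadata, padding, and reserved space, so the real figure shown in the TrueNAS UI will be somewhat lower. It also shows why drive vendors' decimal terabytes look smaller when tools report binary units.

```python
def raidz_usable_bytes(num_drives, drive_bytes, parity=1):
    """Rough usable space of a single RAIDZ vdev: (drives - parity) x drive size.
    Ignores ZFS metadata, padding, and reserved space, so it is an upper bound."""
    if num_drives <= parity:
        raise ValueError("need more drives than parity disks")
    return (num_drives - parity) * drive_bytes


TB = 10**12   # terabyte as drive vendors count it
TiB = 2**40   # tebibyte, the binary unit many tools report

usable = raidz_usable_bytes(4, 8 * TB, parity=1)  # 4x 8TB drives, RAIDZ1
print(f"{usable / TB:.0f} TB = {usable / TiB:.2f} TiB")
# prints "24 TB = 21.83 TiB"
```

Setting `parity=2` or `parity=3` gives the same rough estimate for RAIDZ2 or RAIDZ3.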

If I want to go back to TOS 6, I can re-enable the “UTOS Boot First” setting in the BIOS, boot from the TOS 6 flash drive, and rebuild the disk array. If I want to use the NVMe drive that TrueNAS is on for something else, I could try installing TrueNAS on the TOS 6 flash drive, but I'm not sure it will fit: I checked the size of the TrueNAS install, and it looks like it might just barely fit on a 3.75GB drive.
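One way to make the "will it fit?" check less of a guess is to ask ZFS how much space the current boot pool uses and compare that, plus a safety margin, with the flash drive's capacity. The sketch below assumes the TrueNAS boot pool is named `boot-pool`, that `zpool` is on the PATH (run it from the TrueNAS shell), and that the 3.75GB figure is in decimal gigabytes; the function names and the 25% headroom (meant to leave room for future updates) are hypothetical choices, not TrueNAS requirements.

```python
import shutil
import subprocess

GB = 10**9  # assumption: the 3.75GB flash drive size is in decimal GB


def boot_pool_allocated_bytes(pool="boot-pool"):
    """Return the bytes allocated in a ZFS pool via `zpool list`.
    -H drops headers, -p prints exact numbers, -o picks the column.
    Assumes the TrueNAS boot pool is named 'boot-pool'."""
    out = subprocess.run(
        ["zpool", "list", "-Hp", "-o", "allocated", pool],
        capture_output=True, text=True, check=True,
    ).stdout
    return int(out.strip())


def fits(used_bytes, device_bytes, headroom=0.25):
    """True if the used space plus a margin (a fraction of used) fits on the device."""
    return used_bytes * (1 + headroom) <= device_bytes


if __name__ == "__main__":
    if shutil.which("zpool"):
        used = boot_pool_allocated_bytes()
        target = int(3.75 * GB)  # hypothetical target: the internal TOS flash drive
        print(f"boot pool uses {used / GB:.2f} GB; fits on 3.75GB: {fits(used, target)}")
    else:
        print("zpool not found; run this on the TrueNAS system")
```

Note that this measures only what the boot pool holds today; it says nothing about whether TrueNAS officially supports a boot device that small.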

Hope you find this useful.